Mathematicians like to think they are rigorous. Ha! If you haven't tried to cast your equations into a working computer program I can guarantee you that you left something out. Mathematicians like to omit ‘unnecessary’ detail. I'm a fan of abstraction, but you have to use it in a disciplined manner. If you simply discard the boring parts of the notation, you'll end up with an ambiguous mess. Of course humans can handle ambiguity more gracefully than computers, but it seems to me that if a computer cannot make sense out of what is being expressed, then a poor human like me has very little chance of success.

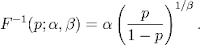

So here's my current frustration: the distribution I've been studying has an interesting quantile function.

And I'm already in trouble. This notation has some extra baggage. Let me recast this as a program.

And I'm already in trouble. This notation has some extra baggage. Let me recast this as a program.

(define (make-quantile-function alpha beta)

(lambda (p)

(* alpha (expt (/ p (- 1 p)) (/ 1 beta)))))

alpha and beta are tuning parameters for this kind of distribution. alpha is essentially the median of the distribution, beta determines whether the curve descends steeply or has a long tail.

The quantile function takes an argument p which is a number between 0 and 1. If you give it an argument of .9, it will return the value of the 90th percentile (that value of X where 90 percent of the distribution is below X). If you give it an argument of .5, you'll get the median. If you give it argument of .25, you'll get the first quartile, etc. It's a useful way of describing a distribution.

The reason the fancy equation had a superscript of -1 is because the quantile function is the inverse of the cumulative distribution function. The reason the function was named F-1 is because they named the cumulative distribution function F. The cumulative distribution function could be defined like this:

(define (make-cumulative-distribution-function alpha beta)

(lambda (x)

(/ 1 (+ 1 (expt (/ x alpha) (- beta))))))

We can show that these functions are inverses of each other:

(define q (make-quantile-function .9 1.3)) ;Value: q (define cdf (make-cumulative-distribution-function .9 1.3)) ;Value: cdf (q (cdf 3)) ;Value: 3.000000000000001 (q (cdf 1.8736214)) ;Value: 1.8736213999999998 (cdf (q .2)) ;Value: .19999999999999996 (cdf (q .134)) ;Value: .13399999999999995I mentioned earlier that I thought the quantile function was particularly interesting. If you've read my past posts, you know that I think you should work in log-odds space whenever you are working with probability. The quantile function maps a probability to the point in the distribution that gives that probability. The 90th percentile is where 9 times out of 10, your latency is at least as good, if not better. So what if we convert the quantile function to a `logile' function? In other words, take the log-odds as an argument.

(define (log-odds->probability lo)

(odds->probability (exp lo)))

(define (odds->probability o)

(/ o (+ o 1)))

(define (quantile->logile q)

(lambda (lo)

(q (log-odds->probability lo))))

(define (make-logile-function alpha beta)

(quantile->logile

(lambda (p)

(* alpha (expt (/ p (- 1 p)) (/ 1 beta))))))

(define logile (make-logile-function .9 1.3))

(logile 3)

;Value: 9.046082508750782

(logile 1)

;Value: 1.9422949805536014

(logile 0)

;Value: .9

(logile -3)

;Value: .0895415224453726

But let's simplify that computation:

(quantile->logile

(lambda (p)

(* alpha (expt (/ p (- 1 p)) (/ 1 beta)))))

((lambda (p)

(* alpha (expt (/ p (- 1 p)) (/ 1 beta))))

(log-odds->probability lo))

(* alpha (expt (/ (log-odds->probability lo)

(- 1 (log-odds->probability lo)))

(/ 1 beta)))

(* alpha (expt (/ (odds->probability (exp lo))

(- 1 (odds->probability (exp lo))))

(/ 1 beta)))

(* alpha (expt (/ (/ (exp lo) (+ (exp lo) 1))

(- 1 (/ (exp lo) (+ (exp lo) 1))))

(/ 1 beta)))

;; after a lot of simplification

(* alpha (exp (/ lo beta)))

(define (make-logile-function alpha beta)

(* alpha (exp (/ lo beta))))

My ‘logile’ function is even simpler than the ‘quantile’ function. Now if I want to express the answers in log space as well, I simply take the log of the logile function:

(log (* alpha (exp (/ lo beta)))) (+ (log alpha) (/ lo beta))Which you will recognize is the equation of a line.

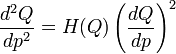

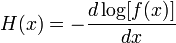

Well so what? With sufficient algebra, any function that has two independent parameters can be turned into a line. However, the cumulative distribution function is an integral, so I have an intuition that there is something interesting going on here. I found some work by Steinbrecher and Shaw where they point out that “Quantile functions may also be characterized as solutions of non-linear ordinary differential equations.” Obviously, the logile function can also be characterized this way, too. I'm hoping that looking at the distribution in this way can yield some insight about the distribution.

And this is where I run into the annoying math. Check out these two equations:

By the first equation, Q is obviously some sort of function (the quantile function). H therefore operates on a function. By the second equation, the argument to H, which is called x, is used as the argument to f, which is the probability distribution function. f maps numbers to numbers, so x is a number. The types don't match.

So what am I supposed to make of this? Well, I'll try to follow the process and see if I can make sense of the result.

10 comments:

The first equation has an implicit "at p" (except that the variable name itself is omitted), so it says

Q''(p) = H(Q(p))*(Q'(p))^2

H is a function from numbers to numbers, and it is defined by the second equation. One might say that "H(Q)" in the first equation represented composition.

It's a very typical notation shift. Mathematicians call their functions f, g, Q... whereas physicists call them f(x), g(x), Q(x) and when there is need to evaluate at a point x_0 they write either

f(x=x_0) or f(x)|_{x=x_0} or simply f(x)|_{x_0}

Well Q is a function, but you could also call a function a vector in a Hilbert Space. And x can be a function (or vector) in a Hilbert Space too (a constant function if you wish). Then if f:H -> H rather than f: R->R then the notation wouldn't be a problem. I think math is weird/interesting because the further you go in courses the more everything just melds together as just examples of abstract objects.

"We can show that these functions are inverses of each other"

At this point, mathematicians reading the blog had their heads explode. We don't show inverses by anecdote!

@petehern

agreed. that wouldn't even pass for a heuristic :-)

can someone say composition of functions?

Function composition - through variable substitution :O

Even Eric Cartman knew that one :P

The post should instead have been titled "Why I don't like math notation".

A - An expression contains no relation (i.e. no =;<;>;<=;>=;etc...)

http://en.wikipedia.org/wiki/Expression_(mathematics)

B - An equation (including inequalities) contains some relation between two expressions.

http://en.wikipedia.org/wiki/Equation

C - A function associates a unique value to each input of a specified type. http://en.wikipedia.org/wiki/Function_(mathematics)

I think printing the following and sticking it to a wall is a must for all math wiz wannabe's:

http://en.wikipedia.org/wiki/Table_of_mathematical_symbols

The first is an equation, and the second is a function. 'x' is a variable, which represents a number. What do you mean by 'types?'

Post a Comment