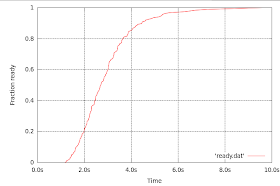

Last night I had an ‘Aha!’ moment. We can look at the distribution as the probability that a result will be ready when we poll (that's not the ‘Aha!’). But we can pretend that we made all the RPCs at exactly the same time. If we had, then this chart shows the fraction of RPCs that finish as a function of time (not a big ‘Aha!’, but I'll take it). So if we were to poll at 2 seconds, we'd find about 20% of the RPCs have completed, but if we wait until about 4.5 seconds we'd find 90% have completed.

What if we polled at 2 seconds and 4.5 seconds? Well, the 20% that completed before the poll at 2 seconds will be serviced right after that poll, but any that become ready between 2 and 4.5 seconds will have to wait until the later poll. That will be 90 - 20 = 70%.

That is to say, the efficacy of the poll (the marginal increase in likelihood of getting a response) is proportional to the vertical difference in the graph divided by the time since the last poll. Or, to put it yet another way, the polling frequency should be proportional to the derivative of the distribution. Aha!

An empirical measurement of the derivative would have a lot of noise. Fortunately, we noticed that the cumulative distribution is closely modeled by a cumulative log-normal curve. Cumulative means integral. The derivative is therefore simply a log-normal density curve, which peaks a little before the median time (about 3 seconds). (Is this an ‘Aha!’, or a ‘D'oh!’ moment?)
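To make the idea concrete, here is a minimal sketch in Python. It is not the code from this project. It assumes the response times really are log-normal and fits the two parameters to the two points read off the chart (20% done at 2 seconds, 90% done at 4.5 seconds). The function names (`fit_lognormal`, `poll_efficacy`, `quantile_poll_times`) are made up for illustration. Spacing the polls at equally spaced quantiles is one simple way to get a poll density proportional to the derivative.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal, used for quantile lookups


def fit_lognormal(t1, p1, t2, p2):
    """Return (mu, sigma) of a log-normal with CDF(t1) = p1 and CDF(t2) = p2."""
    z1, z2 = STD_NORMAL.inv_cdf(p1), STD_NORMAL.inv_cdf(p2)
    sigma = (math.log(t2) - math.log(t1)) / (z2 - z1)
    mu = math.log(t1) - z1 * sigma
    return mu, sigma


def lognormal_cdf(t, mu, sigma):
    """Fraction of RPCs finished by time t (the curve in the chart)."""
    return NormalDist(mu, sigma).cdf(math.log(t))


def lognormal_pdf(t, mu, sigma):
    """Derivative of the CDF: how fast results are becoming ready at time t."""
    return NormalDist(mu, sigma).pdf(math.log(t)) / t


def poll_efficacy(prev_poll, this_poll, mu, sigma):
    """Vertical difference in the CDF divided by the time since the last poll."""
    gained = lognormal_cdf(this_poll, mu, sigma) - lognormal_cdf(prev_poll, mu, sigma)
    return gained / (this_poll - prev_poll)


def quantile_poll_times(n, mu, sigma):
    """n poll times at equally spaced quantiles, so polls cluster where the
    density is high and thin out where it is low."""
    dist = NormalDist(mu, sigma)
    return [math.exp(dist.inv_cdf(k / (n + 1))) for k in range(1, n + 1)]


# Assumed inputs: the two points quoted in the post, read off the chart by eye.
mu, sigma = fit_lognormal(2.0, 0.20, 4.5, 0.90)

if __name__ == "__main__":
    print("fitted median:", math.exp(mu))
    print("efficacy of the 4.5 s poll after a 2 s poll:",
          poll_efficacy(2.0, 4.5, mu, sigma))
    print("four poll times:", quantile_poll_times(4, mu, sigma))
```

With four polls the schedule lands on the 20%, 40%, 60%, and 80% points of the distribution, so the first poll falls at the 2 second mark from the example above.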

I think I have enough of an understanding of this problem to write some code.

You haven't told your readers how much it costs to poll. If polls are free, then just poll every microsecond until the data is available.

What you really want is the relative cost of a poll vs. the cost of delaying an extra second.

You are correct. The cost of polling is a critical part of the equation and I omitted it in this last post. In addition, I said nothing whatsoever about the cost of delaying an extra second. (Actually, those were parameters when I framed the problem as a puzzle.)

As it turns out, the cost of delaying depends on how long you've delayed already. If you've waited 30 seconds, one more second doesn't matter much, but if you could get the answer in 1 second instead of 2, then the extra poll may be worth it.

In the particular application I'm working on, this polling is one of the terms in the critical path that leads to a response to an end user. Shaving off seconds really counts if the total time will be measured in seconds, but bandwidth, while cheap, isn't free.

Whatever the relative costs, they simply change how we react to the distribution of response times. That is, regardless of the costs (provided the case is non-degenerate, i.e. polling costs something), it is a waste of time to poll too early (or too late) and a waste of bandwidth to poll too frequently. What we learn qualitatively is that polling is most effective when the derivative of the cumulative distribution is large and less effective elsewhere.
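The trade-off between poll cost and delay cost can be explored with a small simulation. The sketch below is only an illustration under loud assumptions: response times are drawn from a log-normal with hypothetical parameters (`MU` and `SIGMA`, chosen to roughly match 20% done at 2 seconds and 90% done at 4.5 seconds), the delay cost is linear in the time a finished result sits waiting (the comments above point out that the real cost is not linear), and polling continues at a fixed `tail_interval` once the listed poll times run out. The names `simulate_cost`, `poll_cost`, and `delay_cost` are invented here.

```python
import bisect
import math
import random

# Hypothetical log-normal parameters, roughly matching the chart.
MU, SIGMA = 1.0146, 0.382


def simulate_cost(poll_times, poll_cost, delay_cost,
                  tail_interval=1.0, trials=20000, seed=0):
    """Average cost per RPC of a polling schedule.

    poll_times must be a non-empty, sorted list of seconds after the RPC
    starts. After the last listed poll, polling continues every
    tail_interval seconds until the result is found.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        ready = rng.lognormvariate(MU, SIGMA)      # when the result is ready
        k = bisect.bisect_left(poll_times, ready)  # first poll at or after ready
        if k < len(poll_times):
            polls, found = k + 1, poll_times[k]
        else:
            extra = math.ceil((ready - poll_times[-1]) / tail_interval)
            polls = len(poll_times) + extra
            found = poll_times[-1] + extra * tail_interval
        # Every poll made costs something; so does every second the
        # finished result waited to be picked up.
        total += polls * poll_cost + (found - ready) * delay_cost
    return total / trials


if __name__ == "__main__":
    two_polls = [2.0, 4.5]
    eager = [0.1 * i for i in range(1, 101)]  # poll every 100 ms for 10 s
    for name, schedule in (("two polls", two_polls), ("eager", eager)):
        print(name, simulate_cost(schedule, poll_cost=1.0, delay_cost=1.0))
```

Changing the ratio of `poll_cost` to `delay_cost` shifts which schedule wins, but under this model a poll placed where few results are becoming ready still costs the same as one placed where many are.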